All you ever wanted to ask about AI taking over the world. Spoiler: The role of consciousness is fundamental, but it is not the only thing. What is the AI doomsday scenario? A dystopian narrative of a future where humanity is threatened, or even driven to extinction, by AI. How would that happen? In a worst-case scenario, AI will at some point become... Continue Reading →

We have a choice each time we automate

(This article was originally published in Swedish by the Swedish daily Svenska Dagbladet on April 23, 2023). The development of AI is currently progressing at an unprecedented pace. In the colorful debate that sways between enthusiasm and warning, however, the most relevant question is seldom asked: what do we want with AI and automation? Perhaps not unexpectedly, it is... Continue Reading →

Vaccines are here—now, what will be The New Normal?

With the arrival of covid vaccines—earlier than most expected—there is hope that we will soon see the pandemic slow down. This brings the question of “The New Normal” to the fore. Here’s a futurist’s perspective. Since the first months of the pandemic, when it became clear that it acted as a powerful catalyzer on already... Continue Reading →

The world is changing. Is your strategy future-proof?

You are sitting on a board of directors. And like so many others in your position, you may want to devote more time to strategy work. Because while digitalisation is driving a gigantic market shift, you are asking if your strategy is future-proof. Good question. Digitalization and AI are driving what arguably is the greatest... Continue Reading →

Reality check: What is AI, approaching 2021?

Holding lectures on future and digitalisation, and on how to understand the change that is coming over us, I often meet the question about what AI is. Can machines be intelligent? How intelligent is AI? How far can AI reach? Here’s my view, as we are approaching 2021. Let’s first have a look at why... Continue Reading →

Here’s the key to trumpism and how to go ahead—a futurist’s perspective

Some people still struggle to understand the strong support for president Trump. Others fight about which narrative on the presidential election 2020 is true—did Biden win or was the election "stolen"? From my perspective as a futurist, the key insight is another. The political movements we have been seeing in the US and in other... Continue Reading →

Covid-19 is possibly caused by an attack on the body’s oxygenation system

There are some interesting observations on the Covid-19 disease revealing that it doesn't appear to be normal pneumonia or ARDS (Acute respiratory distress syndrome), but a failure of the body's oxygenation system, due to an attack by the virus on the hemoglobin, transporting oxygen and CO2 through the blood. Check the video below with observations... Continue Reading →

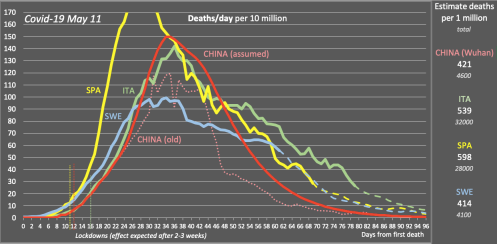

Rough forecasts for Covid-19 in 12 countries (regular updates)

UPDATE May 11: All 12 countries in the forecast are now at about 5 deaths per million per day or lower, Sweden being highest and most behind. In the beginning of June, all countries will probably be at 1 death per million per day or lower. What will happen when lockdowns are being lifted is... Continue Reading →

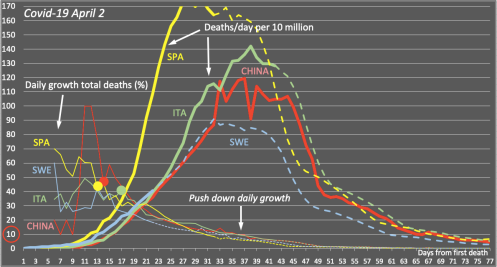

Update on Covid-19: Sweden remains below Italy, Spain’s forecast greatly improved

In an earlier post I tried to give a picture of the situation of the Covid-19 pandemic in Italy, Spain and Sweden, on March 26. Here’s an update from April 1st. Note that I'm not an epidemiologist, but I know mathematics and I’m showing what the data might tell us. The forecast is based... Continue Reading →

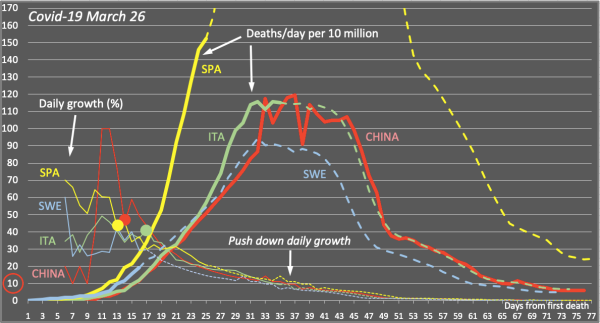

Covid-19: We have to push down daily growth below 10 percent

How is Sweden doing in the Covid-19 pandemic? Will we manage without a lockdown, in contrast to many other countries? The short answer: Possibly yes, IF we continue to slow down the spread of infection. The long answer: Let's have a look at what the curves tell us. Firstly: The number of total confirmed cases... Continue Reading →

Here’s why meetings and events are becoming increasingly important

Many seem to agree that meetings and events are becoming increasingly important today. But exactly why are they becoming more important? Our gut feeling goes a long way to answer the question, but if we want to make the right decisions in a world that is changing at an accelerating pace, it may be good... Continue Reading →

Ten reasons that the future is happening right in front of you

Obviously we don't know anything about the future. And bothering about the future is not what you're doing all day. Yet, you want to be prepared. So ask a futurist. But not even a futurist will know. So what could the futurist tell you? Well, to look around at what's happening. Because the future is... Continue Reading →

The Future of the Nation-State

How the nation-state can find a way through digitalization. Note: This essay is published as chapter 17 in the book Digital Transformation and Public Services: Societal Impacts in Sweden and Beyond. The book is the final report from The Internet and its Direct and Indirect Effects on Innovation and the Swedish Economy—a three-year research project funded by The... Continue Reading →

Eight + one megatrends you need to check out in 2018

A new year has just begun, with phenomena and trends that were mere ideas just a few years ago. Change will never be as slow as today, and therefore it is important to look up to see the big picture. Here are eight megatrends, plus one upcoming hot topic, you need to keep an eye... Continue Reading →

Let’s talk about TRUST—in finance and in business

[Presentation at a launch event of a new book on FinTech in Sweden, see below]. Trust is a funny thing. It's a very human condition, which most people would consider absolutely essential in financial services and in business. Yet, we don't reflect much on what trust really is. Most often it's a gut feeling, not... Continue Reading →

Seven Things You Should Know to Be a Digital Winner

It doesn’t matter whether you’re a doctor, a bus driver, a lawyer, an economist, an industry worker, a salesman, a trader, a teacher, an engineer or a customer service agent—your job won’t exist in a decade or two. Not in the form it exists today. The reason is that machines are getting better and better... Continue Reading →

Nine Things You Should Know to Be Smart About Driverless Cars

Just a few years ago, not many people realized what digitalization of transportation would be. Now, autonomous cars are the talk of the town, and carmakers, tech giants, and start-ups are racing to stay ahead in the mercilessly competitive transformation of the mobility industry. It could turn out to be the most profound of all... Continue Reading →

Eight Things Retailers Should Know to Be Digital Winners

The digital transformation of retail has been going on for some years now, and as a retailer, it’s easy to become worried over how to remain competitive in such a fast changing environment. The bad news is that this change still has a long way to go. The good news, however, is that you shouldn’t... Continue Reading →

Seven Things Lawyers Should Know to Be Digital Winners

Many lawyers and law experts worry about how their professional opportunities are changing with digitalization, observing how some daily tasks are already being taken over by digital automation and artificial intelligence, AI. And yes, the ongoing change in the legal sector is significant, and moreover, it only just started. The good news, however, is that... Continue Reading →

Been a bit busy—will soon be back

For various reasons—mostly positive—I have been a bit busy the last year and not so active on this blog. In particular, I have dedicated a lot of my time to following the ever increasing flow of interesting news on technology and its implications on society, organisations and individuals, continuously sharing much of this on my... Continue Reading →

Announcing the New Energy World Symposium

Note: The New Energy World Symposium has been re-scheduled to June 18-19, 2018. Read more here. § Today I'm announcing the New Energy World Symposium that will hold its first session on June 21, 2016, in Stockholm Sweden. The conference will focus on the disruptive consequences of a new cheap, clean, carbon-free and abundant energy source—LENR or Cold Fusion—that may... Continue Reading →

Give your organisation an injection of inspiration on digitisation

As I give talks on future and technology—or rather before I give my talks—I often meet people saying they feel that we're entering a time of big changes, and yet they seem to have difficulties in defining exactly what this change is. They might refer to the fast uptake of smartphones, tablets and social media, but... Continue Reading →

One step closer to long distance private drones

PhD student Andrew Barry from MIT’s Computer Science and Artificial Intelligence Lab has developed a detect-and-avoid system that lets drones fly autonomously through a tree-filled field at a speed close to 30 mph. The system operates at 120 frames per second and is running 20 times faster than existing software. In Sweden, flying out of sight with... Continue Reading →

Former Skype founders rethink local delivery

Former Skype founders Ahti Heinla and Janus Friis aim at reshaping local small scale delivery with the company Starship Technologies and this small autonomous device, moving at four miles per hour. The plan is to offer local delivery from the grocery store or retailers, or last-mile delivery of goods arriving to local hubs with ordinary carriers,... Continue Reading →

Swedish scientists claim LENR explanation break-through

Essentially no new physics but a little-known physical effect describing matter’s interaction with electromagnetic fields — ponderomotive Miller forces — would explain energy release and isotopic changes in LENR. This is what Rickard Lundin and Hans Lidgren, two top level Swedish scientists, claim, describing their theory in a paper called Nuclear Spallation and Neutron Capture Induced by Ponderomotive Wave... Continue Reading →

Jobs will go away… but not work!

With digitization and automation jobs will disappear, but we'll continue to work anyway. That is what several experts I have been talking to believe. They also think that distribution of wealth in society could become the biggest challenge ahead. None of the experts I have been talking to about jobs and the future is opposed to the picture that... Continue Reading →

The Italian edition — Un’invenzione impossibile — is finally out!

(This post was originally published on Animpossibleinvention.com). I'm happy to announce that the Italian edition of my book An Impossible Invention — Un’invenzione impossibile — is finally out. I’m particularly satisfied since the story is closely related to Italy, to which I have personal connections, my wife being Italian. A great thanks to Alex Passi who has made the... Continue Reading →

Rossi has been granted US patent on the E-Cat — fuel mix specified

(This post was originally published on Animpossibleinvention.com). On August 25, Andrea Rossi was granted a patent on his LENR based heating device the E-Cat. The patent, which has the filing date March 14, 2012, can be downloaded here: US9115913B1 As far as I understand, the patent describes the so-called low temperature E-Cat that Rossi showed in semi-public... Continue Reading →

Watch out for fintech – banks need to wake up!

Stockholm is singled out as the world’s hottest spot for startup companies in the financial and banking sector–Fintech. Now experts are warning that big banks are not sufficiently innovative. “Sure, certain banks are technologically mature, but I do not know if that should be called innovation. What has your bank done for you in recent... Continue Reading →

Here’s Swedish LENR company Neofire

This post was originally published on Animpossibleinvention.com. Apart from the well-known companies with LENR based technology, such as Andrea Rossi’s partner company Industrial Heat, and Brillouin Energy, founded by Robert Godes, there is a series of small, rather unknown companies that have popped up in the last few years. One of them is Swedish Neofire... Continue Reading →

What to learn from an historical cold fusion conference — ICCF19

This post was originally published on Animpossibleinvention.com. Last week, the international conference on cold fusion, ICCF-19, was held, and I would argue it was historical, for several reasons. The first is the ongoing trial by Rossi and his US partner Industrial Heat of a commercially implemented 1 MW thermal power plant based on the E-Cat.... Continue Reading →

Will LENR reach mass adoption faster than any other tech?

This post was originally posted on Animpossibleinvention.com and on E-Cat World. You often hear that new technologies spread to reach global mass adoption at an ever increasing speed -- from electricity, telephones, radio and television to PCs, mobile phones and the web. The hypothesis seems accurate and also reasonable, given that the world is getting increasingly... Continue Reading →

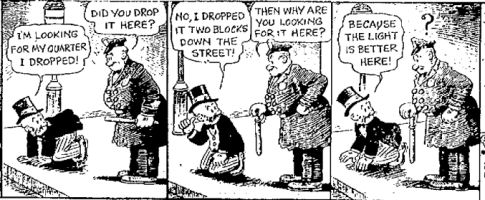

Time to dispel the streetlight paradox of energy

This blog post was originally posted on Animpossibleinvention.com. The current development in LENR, where things seem to be moving fast towards confirmation of a new energy source, could finally open a way to dispel what I call the streetlight paradox of energy. It's about time. You've probably heard the joke about the drunkard who is... Continue Reading →

It seems big banks know about cold fusion

(This blog post was originally posted on Animpossibleinvention.com). The oil price keeps falling. And most analysts seem convinced that they know the reason -- it's about supply, or demand, or Putin, or Saudi Arabia, or Syria or... But what if it were something completely different, known only by top people at the world's biggest banks. And... Continue Reading →

Replication attempts are heating up cold fusion

(This blog post was first published on Animpossibleinvention.com). In just a few weeks, the whole landscape of cold fusion and LENR has changed significantly and, as many have noted, 2015 might bring a breakthrough for LENR in general, with increased public awareness, scientific acceptance and maybe even commercial applications. This is great news. For those who haven't... Continue Reading →

Unconditional basic income might be a brilliant idea

Why would anyone suggest a basic income for everyone, and by the way, would it even be a good idea? Well, here you go: Several studies indicate that machines will be able to do a large part of the jobs humans do today within a few decades, and looking at technology such as the digital... Continue Reading →

Here’s my Youtube channel on technology driven change

http://www.youtube.com/watch?v=_b5TQl-ygGo Technology is changing our world at an accelerating pace, and the change is going faster than most people would think. This is the theme that interests me the most and that I'm passionate about, and it's also the theme that I regularly give talks and seminars on, at conferences and to various institutions, government... Continue Reading →

The second edition of “An Impossible Invention” is out

To everyone who has read my book 'An Impossible Invention' -- thanks for all your support so far! Every one of you has meant a lot to me, as have all the personal messages, emails, reviews, comments, text messages and even phone calls that I have received from all over the world. Now a second edition of the... Continue Reading →

Here is how we could coexist with a superintelligence

The idea of a superintelligence might be frightening. I have touched on the subject before and I also discussed why human values (hopefully) might be important to a superintelligence. But we don't know. Professor Nick Bostrom at the Future of Humanity Institute at Oxford University believes that superintelligence could put all humanity at risk, and he's... Continue Reading →

Amelia understands what you say, and acts

Amelia is a new hire at a call center. She answers in two seconds, solves the problem in four minutes instead of an average of 15 minutes, and customers are quite happy. Amelia never goes home. Amelia is what might be the most advanced artificial intelligence so far on Earth. Developed by US based IPsoft that normally... Continue Reading →

Interview on radio show Free Energy Quest tonight

Tonight, Thursday October 9, I'll be interviewed by Sterling Allan on his radio show Free Energy Quest, at 3 pm PST, midnight Central European Time (CET). You can listen live here. We will be talking about my book An Impossible Invention, and about the recent third party report on the E-Cat. The show will be... Continue Reading →

New scientific report on the E-Cat shows excess heat and nuclear process

This blog post was originally published on Animpossibleinvention.com. A new scientific report on the E-Cat has been released, providing two important findings from a 32-day testrun of the reactor -- together leading to the clear conclusion that the E-Cat is an energy source based on some kind of nuclear reaction, without radiation outside the reactor.... Continue Reading →

An Impossible Invention on Amazon — second edition upcoming

(This blog post was originally published on Animpossibleinvention.com) Lots of people have asked me to make ‘An Impossible Invention’ available on Amazon in order to reach a broader audience. So I did — now there’s an e-book version in Amazon’s Kindle format listed here. The paperback version will get there later for a simple reason:... Continue Reading →

Artificial baby mind learns to talk

In the last months I have been immersed in exciting projects, while also keeping up with how the story told in my book An Impossible Invention continues to evolve. There's been so much on the theme of The Biggest Shift Ever that I would have liked to share in blog posts, so much fascinating science... Continue Reading →

Swedish National Radio paints it black

(This blog post was originally posted on Animpossibleinvention.com) The scientific newsroom of Sveriges Radio, the national Swedish Radio, has dedicated four months of research and a whole week of its air time to the story of Andrea Rossi, the E-Cat and cold fusion (part 1, 2, 3, 4), and I’m honored that it has made me one of its main targets.... Continue Reading →

Defkalion demo proven not to be reliable

(This blog post was originally posted on Animpossibleinvention.com) The measurement setup that was used by Defkalion Green Technologies (DGT) on July 23, 2013, in order to show in live streaming that the Hyperion reactor was producing excess heat, does not measure the heat output correctly, and the error is so large that the reactor might not... Continue Reading →

A few more researchers who were never recognized

(This blog post was originally posted on Animpossibleinvention.com) As those of you who have already read my book 'An Impossible Invention' know, it's written in memory of Martin Fleischmann (1927 – 2012), Sergio Focardi (1932 – 2013) and Sven Kullander (1936 – 2014). All three were important for my work, and they all left... Continue Reading →

Here’s my book on cold fusion and the E-Cat

(This blog post was originally posted on Animpossibleinvention.com) For three difficult years I have experienced much that I wanted to discuss, that I had thought people would want to investigate and understand better. Yet reaching out has been difficult for me. I want you, the reader, to comprehend, forgive and then participate. The term 'cold fusion' is so... Continue Reading →

Google’s goal: To control the world’s data

In 2013, Google acquired eight companies specializing in robotics, and many have asked what Google will do with all those robots. The eighth company was Boston Dynamics, which through funding by DARPA has developed a couple of high-profile animal-like robots and the two-legged humanoid Atlas. A week after that acquisition, Google became the world's... Continue Reading →

Survival of the Fittest Technology

I already outlined the ideas of author and entrepreneur Ray Kurzweil, currently Engineering Director at Google, on exponentially accelerating technological change. His ideas are based on what he calls the Law of Accelerating Returns -- the fairly intuitive suggestion that whatever is developed somewhere in a system, increases the total speed of development in the whole... Continue Reading →

Issue number 3 of Next Magasin focusing on cyborgs

We just released a fresh issue of the Swedish forward looking digital magazine Next Magasin, for which I am the managing editor, this time focusing on cyborgs. The main feature reportage by journalist Siv Engelmark is a fascinating journey through the aspects of our use of technology to make humans into something more than humans.... Continue Reading →

Here are three good reasons to have a look at cold fusion

Ever since Martin Fleischmann and Stanley Pons presented their startling results in 1989, claiming that they had discovered a process that generated anomalously high amount of thermal energy, possibly through nuclear fusion at room temperature, cold fusion has been rejected by the mainstream scientific community. For anyone open to believe the contrary, here are three good reasons... Continue Reading →

Update on Defkalion’s reactor demo in Milan

(This update comes a little bit late, I apologize for that). Defkalion's reactor demo in Milan in July has been discussed extensively. A series of concerns have been raised, among them for the flow measurement not being accurate and for the flow of steam output into the sink being weaker than what could be expected.... Continue Reading →

Thinking, fast and slow, pattern recognition and super intelligence

This summer's reading has been Thinking, Fast and Slow by the Israeli-American psychologist and winner of Nobel Memorial Prize in Economic Sciences, Daniel Kahneman. Great reading (although a little heavy to read from start to end in a short time). The book describes the brain's two ways of thinking -- the faster, more intuitive and emotional... Continue Reading →

Comments on Defkalion reactor demo in Milan

Yesterday I participated as an observer at the Greek-Canadian company Defkalion's demo of its LENR based energy device Hyperion in Milan, Italy. The device is just like Andrea Rossi's E-Cat, loaded with small amounts of nickel powder and pressurized with hydrogen, and supposedly produces net thermal energy through a hitherto unknown process that seems to... Continue Reading →

Prof Sergio Focardi dead at 80

Sergio Focardi, emeritus professor in experimental physics at the University of Bologna, passed away the night between Friday 21st and Saturday 22nd of June, 80 years old, after a long illness. Focardi was born on July 6, 1932, in Firenze, Italy. He was Dean of the Faculty of Mathematical, Physical and Natural Sciences from 1980 to... Continue Reading →

Update of Swedish-Italian report, and Swedish pilot E-Cat customer wanted

The report on energy measurements on the E-Cat by a Swedish-Italian group of scientists has been updated with an appendix explaining more in detail the measurements of input electric power. The new version of the report can be found here. It has been discussed whether a DC current could have been drawn through the power... Continue Reading →

Criticism, praise and comments on the Swedish-Italian E-Cat report

An earlier critic of energy measurements on the E-Cat so far, Swedish nuclear physicist Peter Ekström, has published his comments on the recent Swedish-Italian report on indications of anomalous heat production in the E-Cat. Ekström's comments, which can be found here, focus on a number of issues, ranging from calibration of the input power measurements... Continue Reading →

Two 100 hour scientific tests confirm anomalous heat production in Rossi’s E-Cat

A group of Italian and Swedish scientists from Bologna and Uppsala have just published their report on two tests lasting 96 and 116 hours, confirming an anomalous heat production in the energy device known as the E-Cat, developed by the Italian inventor Andrea Rossi. The report is available for download here and on Arxiv.org. I have... Continue Reading →

An upcoming opportunity – what to do with dead shopping malls

Here's a great upcoming opportunity for anyone who is creative and wants to be at the forefront when the timing is right: What to do with all those huge shopping malls that within a decade will remain empty and outdated. Taking into account that these enormous volumes are still being built all over the world underlines the... Continue Reading →

Suppose Google plans to create a mind

I'm reading the latest book by Ray Kurzweil -- How to Create a Mind (2012). In his book Kurzweil pulls together different pieces of cutting edge brain research and puts them in the context of his own experience of developing technology for voice understanding and character recognition. The result is the Pattern Recognition Theory of... Continue Reading →

Imagine hi res trading replace crowdfunding and VC:s

One main aspect of the digital revolution is that it opens up completely new business models and mechanisms in an industry, such as Spotify's model with streaming music as opposed to downloading and owning music files or even CDs. In an earlier post I selected six industries that will be hit next by the digital revolution, and one of them... Continue Reading →

Swedish TV on Andrea Rossi and the E-cat

Tonight Swedish Television, SVT, dedicated 25 minutes of the program "The World of Science" (Vetenskapens Värld) to the Italian inventor Andrea Rossi and his controversial energy device, the E-cat. The program is online until January 16 on this link: http://www.svtplay.se/video/917953/del-14 (the part on Rossi starts at 24:30 minutes). Update: And here's an English transcript. I believe SVT... Continue Reading →

A really good post on ethics for machines, robots, cars…

Here's a really good piece on the difficulty but also the importance of ethics for machines, robots, autonomous cars, arms and similar stuff powered by artificial intelligence: Moral Machines by Gary Marcus, Professor of Psychology at N.Y.U. Prof Marcus argues that the moment autonomous cars will be so much better and safer than human drivers that we... Continue Reading →

What’s the size of an artificial mind?

Now this is one question that has intrigued me for some time, and I think I have a good and quite simple answer. I've already made it quite clear that I believe that we will be able to create human like artificial intelligence -- strong AI -- within a couple of decades, and that there will be... Continue Reading →

What if we make animals as smart as humans?

Recently a group of researchers reported that they had designed a brain implant that sharpened decision making and restored lost mental capacity in monkeys. Even if that's a very interesting piece of research (you can read the NY Times report here) it's of course light-years from making animals intelligent. Still one bizarre idea immediately struck me. I... Continue Reading →

Exploring ethics for machines

“If we admit the animal should have moral consideration, we need to think seriously about the machine.” That's how Northern Illinois University Professor David Gunkel puts it, discussing whether and to what extent intelligent and autonomous machines that we are developing can be considered to have legitimate moral responsibilities and any legitimate claim to moral treatment. In his new book The... Continue Reading →

Six industries that will be hit by digital revolution

Some people in the music industry still wonder what happened when an established and profitable business model was torn to pieces in a few years. Well, soon a lot more people in several other industries will ask themselves the same thing. I just outlined six industries that will be hit in the near future in... Continue Reading →

We just launched Next Magasin – on how technology’s changing the world

A few days ago we finally launched Next Magasin – a new magazine on how technology's changing the world (just to be clear, it's only in Swedish for now). I'm the managing editor and it's been a great time to work with the first issue, which is free to download or to read on Ipad/Android/Pc.... Continue Reading →

Forget the digital divide

The concept of The Digital Divide – the haves and the have nots in the digital era – has been firmly established and taken for granted for at least a decade. Therefore it was very refreshing to read Christopher Mims' post in Technology Review, entitled "There's no Digital Divide". It's basically an interview with Jessie Daniels,... Continue Reading →

Watch out for humans pushed to the edge

I just read a piece in Wired Magazine on high school students having debates at 350 words per minute. And it came to my mind that in the history of technology it's a well known fact that when a technology is at its last stages, just before being widely replaced by a better and more versatile... Continue Reading →

What would it be like to be super intelligent?

Have you ever considered the immediate images appearing in your mind when you hear the word super intelligence? Maybe an alien with a huge cranium staring at you... or a computer controlling every step you take...? Or have you ever tried to imagine what such a super intelligence actually thinks of you? It might turn... Continue Reading →

Defkalion posts job listing for 21 professionals

I just noted that Greek Defkalion recently posted a job listing, looking for 21 professionals, mostly engineers. The job listing hints at progress towards industrial production of Defkalion’s Hyperion product, supposedly based on an LENR process and a potential competitor with the E-cat, a similar product developed by Andrea Rossi. According to a letter sent... Continue Reading →

The Greek government in test of Defkalion’s technology

Representatives of the Greek government on Tuesday attended a test of Defkalion's energy technology – a potential competitor of Andrea Rossi's 'E-cat.' Meanwhile, Rossi continues to develop his technology. No results were presented. Another six groups are expected to perform independent testing of Defkalion's technology in the upcoming weeks. My report on NyTeknik.se can... Continue Reading →

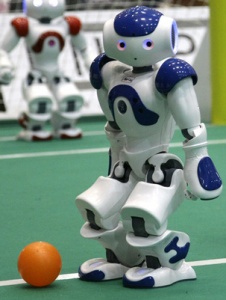

Will robots beat human champions in soccer by 2050?

As you might know, the official goal of the robotics competition Robocup is to field a team of robots capable of winning against the human soccer World Cup champions by 2050. At Nyteknik.se we recently ran a poll among our readers – most of them professional engineers – asking whether this is likely to happen or not.... Continue Reading →

Did you ever wonder what technology really wants?

Kevin Kelly, writer and founding editor of Wired Magazine, did. And he put down the answer really well in his book “What Technology Wants” published in 2010. It’s still really worth reading, giving inspiration to anyone who wants to gain understanding on how we should shape technology to do more good and less harm. If... Continue Reading →

Five industries where 2 billion jobs will be lost

Most people have understood that the music and the movie industries have been profoundly changed by the internet. Fewer realize that this was just the beginning. Futurist Thomas Frey recently talked about how 2 billion jobs will disappear by 2030 and also outlined five areas in which this will happen: 1. Power Industry 2. Automobile transportation 3. Education... Continue Reading →

Autonomous cars highlight fundamental questions

I like the recent call from Molly Wood at Cnet News (where I used to work in 2009): “Self-driving cars: Yes, please! Now, please!”. She notes quite obvious advantages with autonomous cars – safety, efficiency and environmental improvements – and observes that the forces working against adoption are fear and love of driving, emotions so... Continue Reading →

Defkalion offers testing of cold fusion reactors

The Greek company Defkalion has invited scientific and business organizations to test the core technology in its forthcoming energy products. The products are based on LENR – Low Energy Nuclear Reactions. Read my report at Nyteknik.se here.

Humanoids getting recent mainstream interest

"Robot & Frank" is a new movie at the Sundance Film Festival 2012 in Park City, Utah. It's directed by Jake Schreier and is about an old ex-thief being taken care of by a robot nurse. And a couple of days ago the first episode of the Science Fiction TV Series "Äkta människor" (Real People), produced... Continue Reading →

Abundance – The future is better than you think

For everyone interested in the powers of contemporary and future technology development and its potential to change the world into something better, there's a new book that seems interesting to read: "Abundance: The Future Is Better Than You Think" by Peter H. Diamandis. Peter H. Diamandis is chairman and CEO of the X-Prize Foundation, which has defined... Continue Reading →

Why Copyright and Privacy will be Battlefields

Don’t think that what we’re seeing with the proposed U.S. internet legislation called SOPA and PIPA is a onetime phenomenon – a battle that might be won or lost. Discussions and battles on Copyright and Intellectual Property will be a main ground for conflicts and debate in the coming decades. Another main area for long... Continue Reading →

Why human values will be fundamental for a super intelligence

What do the ancient Greeks or the fight for civil rights have to do with the destiny of the universe? More than you could believe. Or rather – they are a fundamental part of it. The short explanation is that without the experience gained through thousands of years of human civilization, a super intelligence wouldn't have... Continue Reading →

The E-cat, Cold Fusion and LENR

In the last year, lots of people have found my reports on the ‘E-cat’ and Cold Fusion or LENR. For those who haven’t heard about this, it seems to be a new and very flexible energy source, potentially based on a new kind of nuclear reaction different from fission (nuclear plants) and fusion (the sun), with... Continue Reading →

2012 is a good year to start a blog

2012 is a good year to start a blog on a major transformation for humanity and of the world. Several theories based on the Mesoamerican Long Count calendar suggest that Dec 21, 2012, will either mark the beginning of a new era, or the end of the world. This blog has basically nothing to do... Continue Reading →